State Spaces & Uninformed Search

CS-188 Lecture 2

State Spaces and Search Problems

Given our agent’s current state,how can we arrive at a new state that satisfies its goals in the best possible way?

Elements of a search problem:

- A state space (all possible states)

- Available actions in each state

- A transition model(Output the next state when an action is taken st current state)

- An action cost

- A start state

- A goal test(A function that takes a state as input, and determines whether it is a goal state)

Difference between World State & Search State

- World State: All information

- Search State: Information necessary for planning

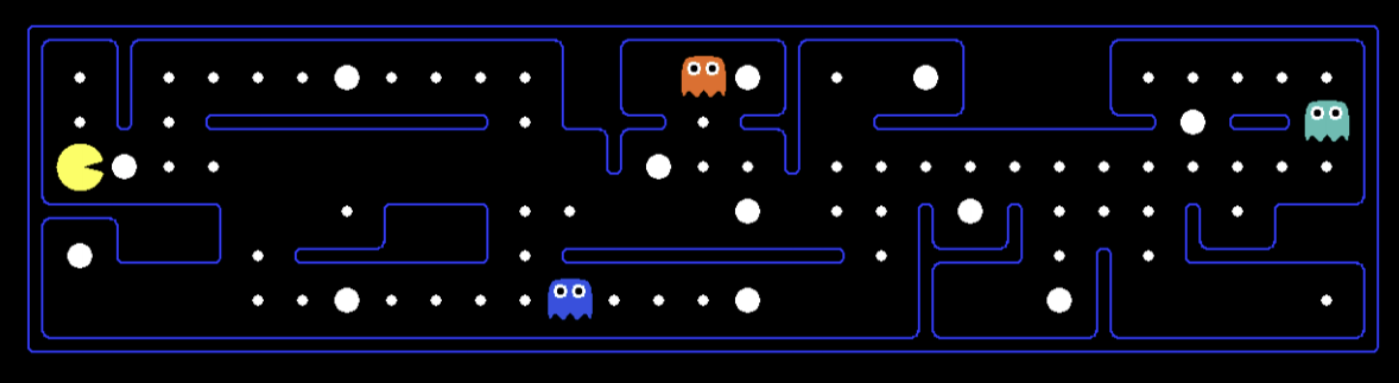

Pacman Example

- Target: Pathing

- Target: Eat all dots

State Space Size

Fundamental Counting Principle

n variable objects which can take on x1,…,xn different values respectively

Then the total number of states is x1·x2·…·xn

Configuration of Pacman

Pacman has 120 postions、4 directions、2 ghosts in 12positions、30 food pellets(can be eaten or not),total space size is 120⋅4⋅122⋅230

State Space Graphs and Search Trees

State Space Graphs

- Nodes: states

- Edges: actions

- Edge Weight: action cost

Too large to store in memory due to the large State Space Size.

each state is represented exactly once

Search Tree(typically used)

have no such restriction on the number of times a state can appear

Also too large.

Sol

We only store states we’re immediately working with.

Uninformed Search

The pseudocode:

1 | |

Judgements:

- Completeness

- Optimality

- Branching factor

- The maximun depth m

- The depth of the shallowest solution s

DFS

“Leftmost” Solution

BFS

Shallowest Solution

UCS – Uniform Cost Search

Select the lowest cost frontier node from the start node

The same thought of Dijkstra

If all the costs are not negative,then UCS can always find the lowest-cost solution.(If not then Bellman-Ford)